8. HOW HAS SOFTWARE ENGINEERING CHANGED?

We have compared the building of software to the building of a house. Each year, hundreds of houses are built across the country, and satisfied customers move in. Each year, hundreds of software products are built by developers, but customers are too often unhappy with the result. Why is there a difference? If it is so easy to enumerate the steps in the development of a system, why are we as software engineers having such a difficult time producing quality software?

Think back to our house-building example. During the building process, the Howells continually reviewed the plans. They also had many opportunities to change their minds about what they wanted. In the same way, software development allows the customer to review the plans at every step and to make changes in the design. After all, if the developer produces a marvelous product that does not meet the customer’s needs, the resultant system will have wasted everyone’s time and effort.

For this reason, it is essential that our software engineering tools and techniques be used with an eye toward flexibility. In the past, we as developers assumed that our customers knew from the start what they wanted. That stability is not usually the case. As the various stages of a project unfold, constraints arise that were not anticipated at the beginning. For instance, after having chosen hardware and software to use for a particular project, we may find that a change in the customer requirements makes it difficult to use a particular database management system to produce menus exactly as promised to the customer. Or we may find that another system with which ours is to interface has changed its procedure or the format of the expected data. We may even find that hardware or software does not work quite as the vendor’s documentation had promised. Thus, we must remember that each project is unique and that tools and techniques must be chosen that reflect the constraints placed on the individual project.

We must also acknowledge that most systems do not stand by themselves. They interface with other systems, either to receive or to provide information. Developing such systems is complex simply because they require a great deal of coordination with the systems with which they communicate. This complexity is especially true of systems that are being developed concurrently. In the past, developers had difficulty assuring the accuracy and completeness of the documentation of interfaces among systems. In subsequent sections, we will address the issue of controlling the interface problem.

The Nature of the Change

These problems are among many that affect the success of our software development projects. Whatever approach we take, we must look both backward and forward. That is, we must look back at previous development projects to see what we have learned, not only about assuring software quality, but also about the effectiveness of our techniques and tools. And we must look ahead to the way software development and the use of software products are likely to change our practices in the future. Wasserman (1995) points out that these changes since the 1970s have been dramatic. For example, early applications were intended to run on a single processor, usually a mainframe. The input was linear, usually a deck of cards or an input tape, and the output was alphanumeric. The system was designed in one of two basic ways: as a transformation, where input was converted to output, or as a transaction, where input determined which function would be performed. Today’s software-based systems are far different and more complex. Typically, they run on multiple systems, sometimes configured in a client-server architecture with distributed functionality. Software performs not only the primary functions that the user needs, but also network control, security, user-interface presentation and processing, and data or object management. The traditional “waterfall” approach to development, which assumes a linear progression of development activities, where one begins only when its predecessor is complete (and which we'll cover in Section 2), is no longer flexible or suitable for today’s systems.

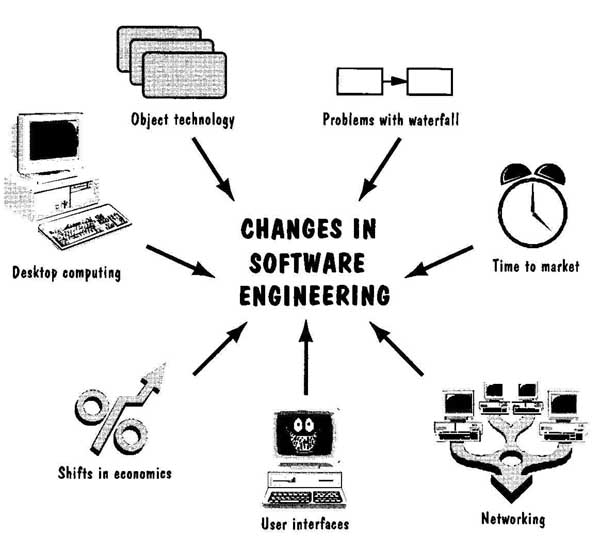

In his Stevens lecture, Wasserman (1996) summarized these changes by identifying seven key factors that have altered software engineering practice, illustrated in FIG. 12:

1. criticality of time-to-market for commercial products

2. shifts in the economies of computing: lower hardware costs and greater development and maintenance costs

3. availability of powerful desktop computing

4. extensive local- and wide-area networking

5. availability and adoption of object-oriented technology

6. graphical user interfaces using windows, icons, menus, and pointers

7. unpredictability of the waterfall model of software development

FIG. 12 The key factors that have changed software development.

For example, the pressures of the marketplace mean that businesses must ready their new products and services before their competitors do; otherwise, the viability of the business itself may be at stake. So traditional techniques for review and testing cannot be used if they require large investments of time that are not recouped as reduced fault or failure rates. Similarly, time previously spent in optimizing code to improve speed or reduce space may no longer be a wise investment; an additional disk or memory card may be a far cheaper solution to the problem.

Moreover, desktop computing puts development power in the hands of users, who now use their systems to develop spreadsheet and database applications, small programs, and even specialized user interfaces and simulations. This shift of development responsibility means that we, as software engineers, are likely to be building more complex systems than before. Similarly, the vast networking capabilities available to most users and developers make it easier for users to find information without special applications. For instance, searching the World Wide Web is quick, easy, and effective; the user no longer needs to write a database application to find what he or she needs.

Developers now find their jobs enhanced, too. Object-oriented technology, coupled with networks and reuse repositories, makes available to developers a large collection of reusable modules for immediate and speedy inclusion in new applications. And graphical user interfaces, often developed with a specialized tool, help put a friendly face on complicated applications. Because we have become sophisticated in the way we analyze problems, we can now partition a system so we develop its subsystems in parallel, requiring a development process very different from the waterfall model. We will see in Section 2 that we have many choices for this process, including some that allow us to build prototypes (to verify with customers and users that the requirements are correct, and to assess the feasibility of designs) and iterate among activities. These steps help us to ensure that our requirements and designs are as fault-free as possible before we instantiate them in code.

Wasserman’s Discipline of Software Engineering

Wasserman (1996) points out that any one of the seven technological changes would have a significant effect on the software development process. But taken together, they have transformed the way we work. In his presentations, DeMarco describes this radical shift by saying that we solved the easy problems first; that means that the set of problems left to be solved is much harder now than it was before. Wasserman addresses this challenge by suggesting that there are eight fundamental notions in software engineering that form the basis for an effective discipline of software engineering. We introduce them briefly here, and we return to them in later sections to see where and how they apply to what we do.

Abstraction. Sometimes, looking at a problem in its “natural state” (i.e., as expressed by the customer or user) is a daunting task. We cannot see an obvious way to tackle the problem in an effective or even feasible way. An abstraction is a description of the problem at some level of generalization that allows us to concentrate on the key aspects of the problem without getting mired in the details. This notion is different from a transformation, where we translate the problem to another environment that we understand better; transformation is often used to move a problem from the real world to the mathematical world, so we can manipulate numbers to solve the problem.

Typically, abstraction involves identifying classes of objects that allow us to group items together; this way, we can deal with fewer things and concentrate on the commonalities of the items in each class. We can talk of the properties or attributes of the items in a class and examine the relationships among properties and classes. For example, suppose we are asked to build an environmental monitoring system for a large and complex river. The monitoring equipment may involve sensors for air quality, water quality, temperature, speed, and other characteristics of the environment. But, for our purposes, we may choose to define a class called “sensor”; each item in the class has certain properties, regardless of the characteristic it is monitoring: height, weight, electrical requirements, maintenance schedule, and so on. We can deal with the class, rather than its elements, in learning about the problem context, and in devising a solution. In this way, the classes help us to simplify the problem statement and focus on the essential elements or characteristics of the problem.

We can form hierarchies of abstractions, too. For instance, a sensor is a type of electrical device, and we may have two types of sensors: water sensors and air sensors.

FIG. 13 Simple hierarchy for monitoring equipment.

Thus, we can form the simple hierarchy illustrated in FIG. 13. By hiding some of the details, we can concentrate on the essential nature of the objects with which we must deal and derive solutions that are simple and elegant. We will take a closer look at abstraction and information hiding in Sections 5, 6, and 7.
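The sensor hierarchy described above can be sketched in code. The following is a minimal illustration, not a design taken from the text: the attribute names (`voltage`, `maintenance_interval_days`) and the helper `devices_due_for_service` are assumptions chosen to mirror the "electrical requirements" and "maintenance schedule" properties mentioned earlier.

```python
from dataclasses import dataclass


@dataclass
class ElectricalDevice:
    """Top of the hierarchy: properties shared by every device."""
    name: str
    voltage: float  # electrical requirement in volts (illustrative attribute)


@dataclass
class Sensor(ElectricalDevice):
    """A sensor is a type of electrical device."""
    maintenance_interval_days: int  # maintenance schedule (illustrative attribute)


@dataclass
class WaterSensor(Sensor):
    """A sensor that monitors some characteristic of the water."""
    characteristic: str = "water quality"


@dataclass
class AirSensor(Sensor):
    """A sensor that monitors some characteristic of the air."""
    characteristic: str = "air quality"


def devices_due_for_service(sensors, days_elapsed):
    """Work with the class 'Sensor' as a whole, ignoring what each one measures."""
    return [s.name for s in sensors if days_elapsed >= s.maintenance_interval_days]


if __name__ == "__main__":
    fleet = [WaterSensor("W1", 12.0, 30), AirSensor("A1", 5.0, 90)]
    print(devices_due_for_service(fleet, 45))
```

The helper never asks whether a sensor monitors water or air. That is the point of the abstraction: the maintenance question can be answered at the level of the class, with the details of each element hidden.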

Analysis and Design Methods and Notations. When you design a program as a class assignment, you usually work on your own. The documentation that you produce is a formal description of your notes to yourself about why you chose a particular approach, what the variable names mean, and which algorithm you implemented. But when you work with a team, you must communicate with many other participants in the development process. Most engineers, no matter what kind of engineering they do, use a standard notation to help them communicate, and to document decisions. For example, an architect draws a diagram or blueprint that any other architect can understand. More importantly, the common notation allows the building contractor to understand the architect’s intent and ideas. As we will see in Sections 4, 5, 6, and 7, there is no similar standard in software engineering, and the misinterpretation that results is one of the key problems of software engineering today.

Analysis and design methods offer us more than a communication medium. They allow us to build models and check them for completeness and consistency. Moreover, we can more readily reuse requirements and design components from previous projects, increasing our productivity and quality with relative ease.

But there are many open questions to be resolved before we can settle on a common set of methods and tools. As we will see in later sections, different tools and techniques address different aspects of a problem, and we need to identify the modeling primitives that will allow us to capture all important aspects of a problem with a single technique. Or we need to develop a representation technique that can be used with all methods, possibly tailored in some way.

User Interface Prototyping. Prototyping means building a small version of a system, usually with limited functionality, that can be used to:

• help the user or customer identify the key requirements of a system

• demonstrate feasibility of a design or approach

Often, the prototyping process is iterative: We build a prototype, evaluate it (with user and customer feedback), consider how changes might improve the product or design, and then build another prototype. The iteration ends when we and our customers think we have a satisfactory solution to the problem at hand.

Prototyping is often used to design a good user interface: the part of the system with which the user interacts. However, there are other opportunities for using prototypes, even in embedded systems (i.e., in systems where the software functions are not explicitly visible to the user). The prototype can show the user what functions will be available, regardless of whether they are implemented in software or hardware. Since the user interface is, in a sense, a bridge between the application domain and the software development team, prototyping can bring to the surface issues and assumptions that may not have been clear using other approaches to requirements analysis. We will consider the role of user interface prototyping in Sections 4 and 5.

Software Architecture. The overall architecture of a system is important not only to the ease of implementing and testing it, but also to the speed and effectiveness of maintaining and changing it. The quality of the architecture can make or break a system; indeed, Shaw and Garlan (1996) present architecture as a discipline on its own whose effects are felt throughout the entire development process. The architectural structure of a system should reflect the principles of good design that we will study in Sections 5 and 7.

A system’s architecture describes the system in terms of a set of architectural units, and a map of how the units relate to one another. The more independent the units, the more modular the architecture and the more easily we can design and develop the pieces separately. Wasserman (1996) points out that there are at least five ways that we can partition the system into units:

1. modular decomposition: based on assigning functions to modules

2. data-oriented decomposition: based on external data structures

3. event-oriented decomposition: based on events that the system must handle

4. outside-in design: based on user inputs to the system

5. object-oriented design: based on identifying classes of objects and their interrelationships

These approaches are not mutually exclusive. For example, we can design a user interface with event-oriented decomposition while we design the database using object-oriented or data-oriented design. We will examine these techniques in further detail in later sections. The importance of these approaches is their capture of our design experience, enabling us to capitalize on our past projects by reusing both what we have done and what we learned by doing it.

Software Process. Since the late 1980s, many software engineers have paid careful attention to the process of developing software, as well as to the products that result. The organization and discipline in the activities have been acknowledged to contribute to the quality of the software and to the speed with which it is developed. However, Wasserman notes that the great variations among application types and organizational cultures make it impossible to be prescriptive about the process itself. Thus, it appears that the software process is not fundamental to software engineering in the same way as are abstraction and modularization (Wasserman 1996).

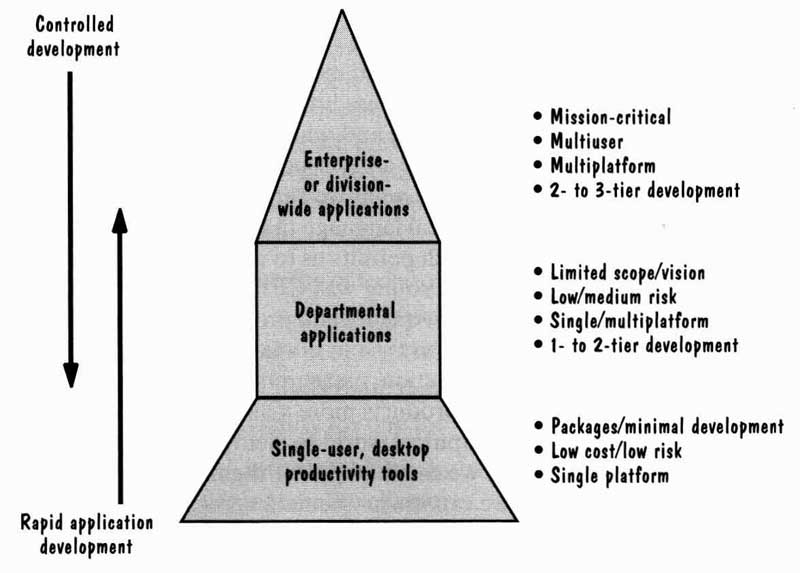

Instead, he suggests that different types of software need different processes. In particular, Wasserman suggests that enterprisewide applications need a great deal of control, whereas individual and departmental applications can take advantage of rapid application development, as we illustrate in FIG. 14.

By using today’s tools, many small and medium-sized systems can be built by one or two developers, each of whom must take on multiple roles. The tools may include a text editor, programming environment, testing support, and perhaps a small database to capture key data elements about the products and processes themselves. Because the project’s risk is relatively low, little management support or review is needed.

However, large, complex systems need more structure, checks, and balances. These systems often involve many customers and users, and development continues over a long period of time. Moreover, the developers do not always have control over the entire development, as some critical subsystems may be supplied by others or be implemented in hardware. This type of high-risk system requires analysis and design tools, project management, configuration management, more sophisticated testing tools, and a more rigorous system of review and causal analysis. In Section 2, we will take a careful look at several process alternatives to see how varying the process addresses different goals. Then, in Sections 12 and 13, we evaluate the effectiveness of some processes and look at ways to improve them.

FIG. 14 Differences in development (Wasserman 1996).

Reuse. In software development and maintenance, we often take advantage of the commonalities across applications by reusing items from previous development. For example, we use the same operating system or database management system from one development project to the next, rather than building a new one each time. Similarly, we reuse sets of requirements, parts of designs, and groups of test scripts or data when we build systems that are similar but not the same as what we have done before. Barnes and Bollinger (1991) point out that reuse is not a new idea, and they provide many interesting examples of how we reuse much more than just code.

Prieto-Diaz (1991) introduced the notion of reusable components as a business asset. Companies and organizations invest in items that are reusable and then gain quantifiable benefit when those items are used again in subsequent projects. However, establishing a long-term, effective reuse program can be difficult, because there are several barriers:

• It is sometimes faster to build a small component than to search for one in a repository of reusable components.

• It may take extra time to make a component general enough to be reusable easily by other developers in the future.

• It is difficult to document the degree of quality assurance and testing that have been done, so that a potential reuser can feel comfortable about the quality of the component.

• It is not clear who is responsible if a reused component fails or needs to be updated.

• It can be costly and time-consuming to understand and reuse a component written by someone else.

• There is often a conflict between generality and specificity.

We will look at reuse in more detail in Section 12, examining several examples of successful reuse.

Measurement. Improvement is a driving force in software engineering research: improving our processes, resources, and methods so that we produce and maintain better products. But sometimes we express improvement goals generally, with no quantitative description of where we are and where we would like to go. For this reason, software measurement has become a key aspect of good software engineering practice. By quantifying where we can and what we can, we describe our actions and their outcomes in a common mathematical language that allows us to evaluate our progress. In addition, a quantitative approach permits us to compare progress across disparate projects. For example, when John Young was CEO of Hewlett-Packard, he set goals of “10X,” a tenfold improvement in quality and productivity, for every project at Hewlett-Packard, regardless of application type or domain (Grady and Caswell 1987).

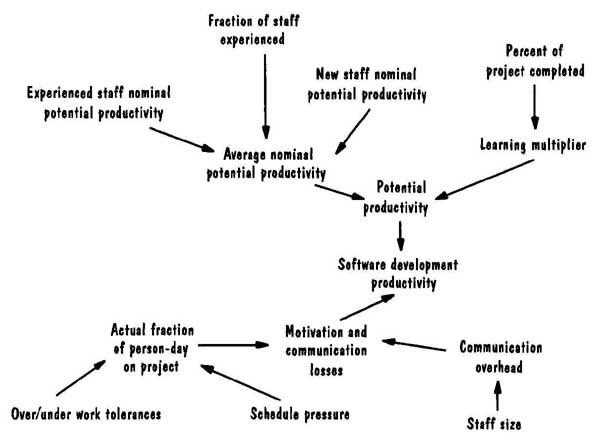

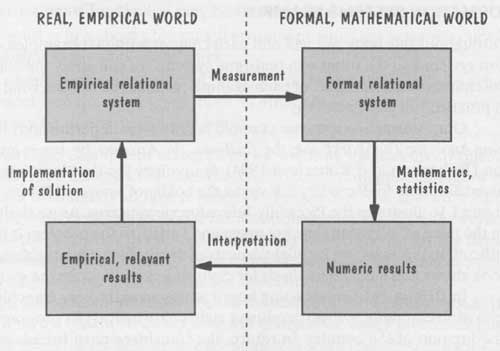

At a lower level of abstraction, measurement can help to make specific characteristics of our processes and products more visible. It is often useful to transform our understanding of the real, empirical world to elements and relationships in the formal, mathematical world, where we can manipulate them to gain further understanding. As illustrated in FIG. 15, we can use mathematics and statistics to solve a problem, look for trends, or characterize a situation (such as with means and standard deviations). This new information can then be mapped back to the real world and applied as part of a solution to the empirical problem we are trying to solve. Throughout this guide, we will see examples of how measurement is used to support analysis and decision making.

FIG. 15 Using measurement to help find a solution.
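The round trip from the empirical world to the mathematical world and back can be sketched in a few lines. This is only an illustration under stated assumptions: the module names and fault counts are invented sample data, and the "more than one standard deviation above the mean" rule is one simple way to characterize a situation, not a method prescribed by the text.

```python
from statistics import mean, pstdev


def summarize(values):
    """Map observations into the mathematical world: mean and standard deviation."""
    return mean(values), pstdev(values)


def unusually_high(measurements, threshold_sd=1.0):
    """Map the result back to the real world: name the items that stand out.

    An item stands out here if its value exceeds the mean by more than
    `threshold_sd` population standard deviations (an illustrative rule).
    """
    mu, sigma = summarize(list(measurements.values()))
    return sorted(
        name for name, value in measurements.items()
        if value > mu + threshold_sd * sigma
    )


if __name__ == "__main__":
    # Hypothetical data: faults found per module during testing.
    faults_per_module = {"billing": 2, "scheduling": 3, "ratings": 4, "reports": 11}
    print(summarize(list(faults_per_module.values())))
    print(unusually_high(faults_per_module))
```

The numbers by themselves decide nothing. They become useful once the result is mapped back to a real-world action, such as giving the flagged module an extra review.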

Tools and Integrated Environments. For many years, vendors touted CASE (Computer-Aided Software Engineering) tools, where standardized, integrated development environments would enhance software development. However, we have seen how different developers use different processes, methods, and resources, so a unifying approach is easier said than done.

On the other hand, researchers have proposed several frameworks that allow us to compare and contrast both existing and proposed environments. These frameworks permit us to examine the services provided by each software engineering environment and to decide which environment is best for a given problem or application development.

One of the major difficulties in comparing tools is that vendors rarely address the entire development life cycle. Instead, they focus on a small set of activities, such as design or testing, and it is up to the user to integrate the selected tools into a complete development environment. Wasserman (1990) has identified five issues that must be addressed in any tool integration:

1. platform integration: the ability of tools to interoperate on a heterogeneous network

2. presentation integration: commonality of user interface

3. process integration: linkage between the tools and the development process

4. data integration: the way tools share data

5. control integration: the ability for one tool to notify and initiate action in another

In each of the subsequent sections of this guide, we will examine tools to support the activities and concepts we describe in the section.

You can think of the eight concepts described here as eight threads woven through the fabric of this guide, tying together the disparate activities we call software engineering. As we learn more about software engineering, we will revisit these ideas to see how they unify and elevate software engineering as a scientific discipline.

9. INFORMATION SYSTEMS EXAMPLE

Throughout this guide, we will end each section with two examples, one of an information system and the other of a real-time system. We will apply the concepts described in the section to some aspect of each example, so that you can see what the concepts mean in practice, not just in theory.

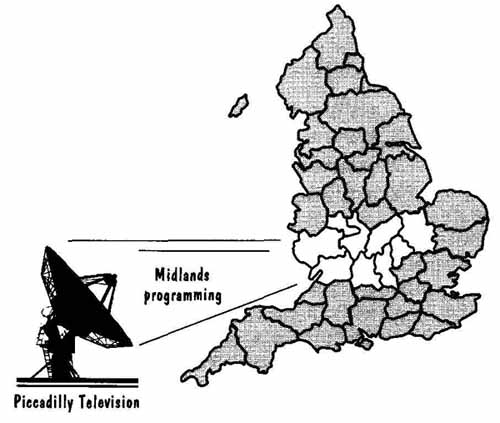

Our information systems example is drawn (with permission) from Complete Systems Analysis: The Workbook, the Textbook, the Answers, by James and Suzanne Robertson (Robertson and Robertson 1994). It involves the development of a system to sell advertising time for Piccadilly Television, the holder of a regional British television franchise. FIG. 16 illustrates the Piccadilly Television viewing area. As we shall see, the constraints on the price of television time are many and varied, so the problem is both interesting and difficult. In this guide, we highlight aspects of the problem and its solution; the Robertsons’ guide shows you detailed methods for capturing and analyzing the system requirements.

In Britain, the broadcasting board issues an eight-year franchise to a commercial television company, giving it exclusive rights to broadcast its programs in a carefully defined region of the country. In return, the franchisee must broadcast a prescribed balance of drama, comedy, sports, children’s and other programs. Moreover, there are restrictions on which programs can be broadcast at which times, as well as rules about the content of programs and commercial advertising.

FIG. 16 Piccadilly Television franchise area.

A commercial advertiser has several choices to reach the Midlands audience: Piccadilly, the cable channels, and the satellite channels. However, Piccadilly attracts most of the audience. Thus, Piccadilly must set its rates to attract a portion of an advertiser’s national budget. One of the ways to attract an advertiser’s attention is with audience ratings that reflect the number and type of viewers at different times of the day. The ratings are reported in terms of program type, audience type, time of day, television company, and more. But the advertising rate depends on more than just the ratings. For example, the rate per hour may be cheaper if the advertiser buys a large number of hours. Moreover, there are restrictions on the type of advertising at certain times and for certain programs. For example:

• Advertisements for alcohol may be shown only after 9 P.M.

• If an actor is in a show, then an advertisement with that actor may not be broadcast within 45 minutes of the show.

• If an advertisement for a class of product (such as an automobile) is scheduled for a particular commercial break, then no other advertisement for something in that class may be shown during that break.
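The three restrictions above can be expressed as a small rule check. The sketch below is an illustration only, not the Piccadilly system or the Robertsons' design. The data model (`Advertisement`, `Show`, `CommercialBreak`) and the function `violations` are hypothetical. It also makes two interpretive assumptions: "after 9 P.M." is checked against the break's start time on the same calendar day, and "within 45 minutes of the show" covers the period from 45 minutes before the show starts to 45 minutes after it ends.

```python
from dataclasses import dataclass, field
from datetime import datetime, time, timedelta


@dataclass(frozen=True)
class Advertisement:
    title: str
    product_class: str               # e.g. "alcohol", "automobile"
    actors: frozenset = frozenset()  # actors appearing in the advertisement


@dataclass(frozen=True)
class Show:
    title: str
    start: datetime
    end: datetime
    actors: frozenset = frozenset()


@dataclass
class CommercialBreak:
    start: datetime
    ads: list = field(default_factory=list)  # advertisements already scheduled


NINE_PM = time(21, 0)
ACTOR_GAP = timedelta(minutes=45)


def violations(ad, brk, shows):
    """Return a list of the rules that scheduling `ad` in `brk` would break."""
    problems = []
    # Rule 1: alcohol may be shown only after 9 P.M. (same-day assumption).
    if ad.product_class == "alcohol" and brk.start.time() < NINE_PM:
        problems.append("alcohol before 9 P.M.")
    # Rule 2: no advertisement with an actor within 45 minutes of that actor's show.
    for show in shows:
        shares_actor = bool(ad.actors & show.actors)
        too_close = show.start - ACTOR_GAP <= brk.start <= show.end + ACTOR_GAP
        if shares_actor and too_close:
            problems.append(f"shares an actor with '{show.title}' within 45 minutes")
    # Rule 3: at most one advertisement per product class in a break.
    if any(other.product_class == ad.product_class for other in brk.ads):
        problems.append(f"another '{ad.product_class}' advertisement is in this break")
    return problems
```

Returning a list of violated rules, rather than a single yes or no, keeps each restriction separate and visible, which makes it easier to add the further rules the example introduces later.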

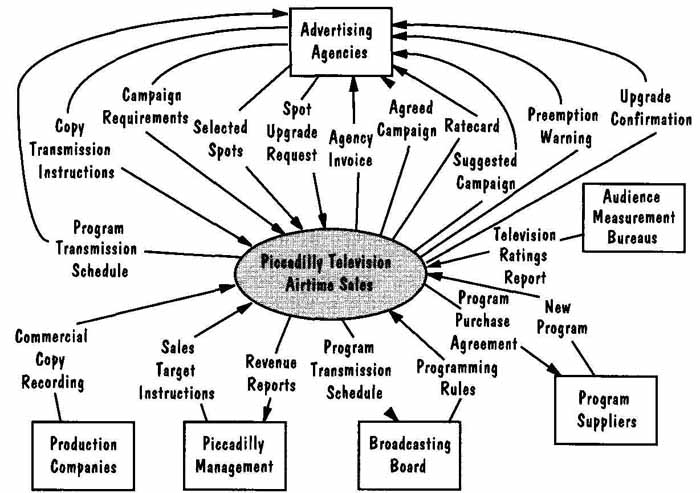

As we explore this example in more detail, we will note the additional rules and regulations about advertising and its cost. The system context diagram in FIG. 17 shows us the system boundary and how it relates to these rules. The shaded oval is the Piccadilly system that concerns us as our information system example; the system boundary is simply the perimeter of the oval. The arrows and boxes display the items that can affect the working of the Piccadilly system, but we consider them only as a collection of inputs and outputs, with their sources and destinations, respectively.

In later sections, we will make visible the activities and elements inside the shaded oval (i.e., within the system boundary). We will examine the design and development of this system using the software engineering techniques that are described in each section.

10. REAL-TIME EXAMPLE

Our real-time example is based on the embedded software in the Ariane-5, a space rocket belonging to the European Space Agency (ESA). On June 4, 1996, on its maiden flight, the Ariane-5 was launched and performed perfectly for approximately 40 seconds. Then, it began to veer off course. At the direction of an Ariane ground controller, the rocket was destroyed by remote control. The destruction of the uninsured rocket was a loss not only of the rocket itself, but also of the four satellites it contained; the total cost of the disaster was $500 million (Newsbytes home page 1996; Lions et al. 1996).

FIG. 17 Piccadilly context diagram showing system boundary (Robertson and Robertson 1994).

Software is involved in almost all aspects of the system, from the guidance of the rocket to the internal workings of its component parts. The failure of the rocket and its subsequent destruction raise many questions about software quality. As we will see in later sections, the inquiry board that investigated the cause of the problem focused on software quality and its assurance. In this section, we look at quality in terms of the business value of the rocket.

There were many organizations with a stake in the success of Ariane-5: the ESA, the Centre National d’Etudes Spatiales (CNES, the French space agency in overall command of the Ariane program), and 12 other European countries. The rocket’s loss was another in a series of delays and problems to affect the Ariane program, including a nitrogen leak during engine testing in 1995 that killed two engineers. However, the June incident was the first whose cause was directly attributed to software failure.

The business impact of the incident went well beyond the $500 million in equipment. In 1996, the Ariane-4 rocket and previous variants held more than half of the world’s launch contracts, ahead of American, Russian, and Chinese launchers. Thus, the credibility of the program was at stake, as well as the potential business from future Ariane rockets.

The future business was based in part on the new rocket’s ability to carry heavier payloads into orbit than previous launchers could. Ariane-5 was designed to carry a single satellite up to 6.8 tons or two satellites with a combined weight of 5.9 tons. Further development work hoped to add an extra ton to the launch capacity by 2002. This increased carrying capacity has clear business advantages; often, operators reduce their costs by sharing launches, so Ariane can offer to host several companies’ payloads at the same time.

Consider what quality means in the context of this example. The destruction of Ariane-5 turned out to be the result of a requirement that was misspecified by the customer. In this case, the developer might claim that the system is still high quality; it was just built to the wrong specification. Indeed, the inquiry board formed to investigate the cause and cure of the disaster noted that

The Board’s findings are based on thorough and open presentations from the Ariane-5 project teams, and on documentation which has demonstrated the high quality of the Ariane 5 program as regards engineering work in general and completeness and traceability of documents. (Lions et al. 1996)

But from the user’s and customer’s point of view, the specification process should have been good enough to identify the specification flaw and force the customer to correct the specification before damage was done. The inquiry board acknowledged that:

The supplier of the SRI [subsystem in which the cause of the problem was eventually located] was only following the specification given to it, which stipulated that in the event of any detected exception the processor was to be stopped. The exception which occurred was not due to random failure but a design error. The exception was detected, but inappropriately handled because the view had been taken that software should be considered correct until it is shown to be at fault. The Board has reason to believe that this view is also accepted in other areas of Ariane-5 software design. The Board is in favor of the opposite view, that software should be assumed to be faulty until applying the currently accepted best practice methods can demonstrate that it is correct. (Lions et al. 1996)

In later sections, we will investigate this example in more detail, looking at the design, testing, and maintenance implications of the developers’ and customers’ decisions. We will see how poor systems engineering at the beginning of development led to a series of poor decisions that led in turn to disaster. On the other hand, the openness of all concerned, including ESA and the inquiry board, coupled with high-quality documentation and an earnest desire to get at the truth quickly, resulted in quick resolution of the immediate problem and an effective plan to prevent such problems in the future.

A systems view allowed the inquiry board, in cooperation with the developers, to view the Ariane-5 as a collection of subsystems. This collection reflects the analysis of the problem, as we described in this section, so that different developers can work on separate subsystems with distinctly different functions. For example:

The attitude of the launcher and its movements in space are measured by an Inertial Reference System (SRI). It has its own internal computer, in which angles and velocities are calculated on the basis of information from a “strap-down” inertial platform, with laser gyros and accelerometers. The data from the SRI are transmitted through the databus to the On-Board Computer (OBC), which executes the flight program and controls the nozzles of the solid boosters and the Vulcain cryogenic engine, via servovalves and hydraulic actuators. (Lions et al. 1996)

But the synthesis of the solution must include an overview of all the component parts, where the parts are viewed together to determine if the “glue” that holds them together is sufficient and appropriate. In the case of Ariane-5, the inquiry board suggested that the customers and developers should have worked together to find the critical software and make sure that it could handle not only anticipated but also unanticipated behavior.

This means that critical software—in the sense that failure of the software puts the mission at risk—must be identified at a very detailed level, that exceptional behavior must be confined, and that a reasonable back-up policy must take software failures into account. (Lions et al. 1996)

11. WHAT THIS SECTION MEANS FOR YOU

This section has introduced many concepts that are essential to good software engineering research and practice. You, as an individual software developer, can use these concepts in the following ways:

• When you are given a problem to solve (whether or not the solution involves software), you can analyze the problem by breaking it into its component parts, and the relationships among the parts. Then, you can synthesize a solution by solving the individual subproblems and merging them to form a unified whole.

• You must understand that the requirements may change, even as you are analyzing the problem and building a solution. So your solution should be well-documented and flexible, and you should document your assumptions and the algorithms you use (so that they are easy to change later).

• You must view quality from several different perspectives, understanding that technical quality and business quality may be very different.

• You can use abstraction and measurement to help identify the essential aspects of the problem and solution.

• You can keep in mind the system boundary, so that your solution does not overlap with the related systems that interact with the one you are building.

12. WHAT THIS SECTION MEANS FOR YOUR DEVELOPMENT TEAM

Much of your work will be done as a member of a larger development team. As we have seen in this section, development involves requirements analysis, design, implementation, testing, configuration management, quality assurance, and more. Some of the people on your team may wear multiple hats, as may you, and the success of the project depends in large measure on the communication and coordination among the team members. We have seen in this section that you can aid the success of your project by selecting

• a development process that is appropriate to your team size, risk level, and application domain

• tools that are well-integrated and support the type of communication your project demands

• measurements and supporting tools to give you as much visibility and understanding as possible

13. WHAT THIS SECTION MEANS FOR RESEARCHERS

Many of the issues discussed in this section are good subjects for further research. We have noted some of the open issues in software engineering, including the need to find

• the right levels of abstraction to make the problem easy to solve

• the right measurements to make the essential nature of the problem and solution visible and helpful

• an appropriate problem decomposition, where each sub-problem is solvable

• a common framework or notation to allow easy and effective tool integration, and to maximize communication among project participants

In later sections, we will describe many techniques. Some have been used and are well-proven software development practices, whereas others are proposed and have only been demonstrated on small, “toy,” or student projects. We hope to show you how to improve what you are doing now and at the same time to inspire you to be creative and thoughtful about trying new techniques and processes in the future.

14. TERM PROJECT

It is impossible to learn software engineering without participating in developing a software project with your colleagues. For this reason, each section of this guide will present information about a term project that you can perform with a team of classmates. The project, based on a real system in a real organization, will allow you to address some of the very real challenges of analysis, design, implementation, testing, and maintenance. In addition, because you will be working with a team, you will deal with issues of team diversity and project management.

The term project involves the kinds of loans you might negotiate with a bank when you want to buy a house. Banks generate income in many ways, often by borrowing money from their depositors at a low interest rate and then lending that same money back at a higher interest rate in the form of bank loans. However, long-term property loans, such as mortgages, typically have terms of up to 15, 25, or even 30 years. That is, you have 15, 25, or 30 years to repay the loan: the principal (the money you originally borrowed) plus interest at the specified rate. Although the income from interest on these loans is lucrative, the loans tie up money for a long time, preventing the banks from using their money for other transactions. Consequently, the banks often sell their loans to consolidating organizations, taking less long-term profit in exchange for freeing the capital for use in other ways.

The application for your term project is called the Loan Arranger. It is fashioned on ways in which a (mythical) Financial Consolidation Organization, FCO, handles the loans it buys from banks. The consolidation organization makes money by purchasing loans from banks and selling them to investors. The bank sells the loan to FCO, getting the principal in return. Then, as we shall see, FCO sells the loan to investors who are willing to wait longer than the bank to get their return.

To see how the transactions work, consider how you get a loan (called a “mortgage”) for a house. You may purchase a $150,000 house by paying $50,000 as an initial payment (called the “down payment”) and taking a loan for the remaining $100,000. The “terms” of your loan from the First National Bank may be for 30 years at 5% interest. This terminology means that the First National Bank gives you 30 years (the term of the loan) to pay back the amount you borrowed (the “principal”) plus interest on whatever you do not pay back right away. For example, you can pay the $100,000 by making a payment once a month for 30 years (that is, 360 “installments” or “monthly payments”), with interest on the unpaid balance. If the initial balance is $100,000, the bank calculates your monthly payment using the amount of principal, the interest rate, the amount of time you have to pay off the loan, and the assumption that all monthly payments should be the same amount.
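The text does not say which formula the bank applies. One standard way to obtain equal monthly payments, assumed here, is the fixed-payment annuity formula, where P is the principal, r is the monthly interest rate (the annual rate divided by 12), n is the number of monthly payments, and M is the resulting payment. These symbols are introduced only for this sketch.

```latex
\[
M = \frac{P\,r}{1 - (1 + r)^{-n}},
\qquad
P = 100{,}000,\quad r = \frac{0.05}{12},\quad n = 360
\quad\Longrightarrow\quad
M \approx 536.82
\]
```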

For instance, suppose the bank tells you that your monthly payment is to be $536.82. The first month’s interest is (1/12) × (0.05) × ($100,000), or $416.67. The rest of the payment ($536.82 − $416.67) pays for reducing the principal: $120.15. For the second month, you now owe $100,000 minus the $120.15, so the interest is reduced to (1/12) × (0.05) × ($100,000 − $120.15), or $416.17. Thus, during the second month, only $416.17 of the monthly payment is interest, and the remainder, $120.65, is applied to the remaining principal. Over time, you pay less interest and more toward reducing the remaining balance of principal, until you have paid off the entire principal and own your property free and clear of any encumbrance by the bank.
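The month-by-month arithmetic above can be reproduced with a short program. The following is a minimal sketch, not a description of how any bank's system works: it assumes the standard fixed-payment annuity formula and rounding to the nearest cent each month, and the function names `monthly_payment` and `schedule` are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def monthly_payment(principal, annual_rate, years):
    """Equal monthly payment, assuming the standard annuity formula."""
    r = Decimal(annual_rate) / 12          # monthly interest rate
    n = years * 12                         # number of monthly payments
    payment = Decimal(principal) * r / (1 - (1 + r) ** -n)
    return payment.quantize(CENT, rounding=ROUND_HALF_UP)


def schedule(principal, annual_rate, years, months):
    """Return (month, interest, toward_principal, balance) for the first months."""
    balance = Decimal(principal)
    r = Decimal(annual_rate) / 12
    payment = monthly_payment(principal, annual_rate, years)
    rows = []
    for month in range(1, months + 1):
        # Interest is charged on the unpaid balance; the rest reduces principal.
        interest = (balance * r).quantize(CENT, rounding=ROUND_HALF_UP)
        toward_principal = payment - interest
        balance -= toward_principal
        rows.append((month, interest, toward_principal, balance))
    return rows
```

Calling `schedule("100000", "0.05", 30, 2)` yields the interest and principal portions for the first two months of the example loan. Passing the amounts as strings keeps the `Decimal` values exact, which is a design choice made here to avoid floating-point rounding in currency arithmetic.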

First National Bank may sell your loan to FCO some time during the period when you are making payments. First National negotiates a price with FCO. In turn, FCO may sell your loan to ABC Investment Corporation. You still make your mortgage payments each month, but your payment goes to ABC, not First National. Usually, FCO sells its loans in “bundles,” not individual loans, so that an investor buys a collection of loans based on risk, principal involved, and expected rate of return. In other words, an investor such as ABC can contact FCO and specify how much money it wishes to invest, for how long, how much risk it is willing to take (based on the history of the people or organizations paying back the loan), and how much profit is expected.

The Loan Arranger is an application that allows an FCO analyst to select a bundle of loans to match an investor’s desired investment characteristics. The application accesses information about loans purchased by FCO from a variety of lending institutions.

When an investor specifies investment criteria, the system selects the optimal bundle of loans that satisfies the criteria. While the system will allow some advanced optimizations, such as selecting the best bundle of loans from a subset of those available (for instance, from all loans in Massachusetts, rather than from all the loans available), the system will still allow an analyst to manually select loans in a bundle for the client. In addition to bundle selection, the system also automates information management activities, such as updating bank information, updating loan information, and adding new loans when banks provide that information each month.
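To make the idea of bundle selection concrete, here is a rough sketch under stated assumptions. The `Loan` fields, the numeric risk score, and the `select_bundle` function are all hypothetical and are not taken from the Loan Arranger requirements. The sketch uses a simple greedy pass, which satisfies the criteria but does not guarantee the optimal bundle; a real system would need a proper optimization method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Loan:
    # All fields are illustrative assumptions, not part of the term project spec.
    loan_id: str
    state: str
    principal: float   # remaining principal, in dollars
    risk: float        # hypothetical score from 0.0 (safe) to 1.0 (risky)
    years_left: int    # remaining term of the loan


def select_bundle(loans, budget, max_risk, max_years, state=None):
    """Greedy sketch: filter by the investor's criteria, then add the
    lowest-risk loans first while they still fit within the budget."""
    eligible = [
        loan for loan in loans
        if loan.risk <= max_risk
        and loan.years_left <= max_years
        and (state is None or loan.state == state)
    ]
    bundle, total = [], 0.0
    # Lowest risk first; among equal risks, larger principal first.
    for loan in sorted(eligible, key=lambda loan: (loan.risk, -loan.principal)):
        if total + loan.principal <= budget:
            bundle.append(loan)
            total += loan.principal
    return bundle, total
```

The optional `state` argument mirrors the idea of choosing from a subset of the portfolio, such as only the loans in Massachusetts. An analyst's manual selection would simply bypass this function and build the list by hand.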

We can summarize this information by saying that the Loan Arranger system allows a loan analyst to access information about mortgages (home loans, described here simply as “loans”) purchased by FCO from multiple lending institutions with the intention of repackaging the loans to sell to other investors. The loans purchased by FCO for investment and resale are collectively known as the loan portfolio. The Loan Arranger system tracks these portfolio loans in its repository of loan information. The loan analyst may add, view, update, or delete loan information about lenders and the set of loans in the portfolio. Additionally, the system allows the loan analyst to create “bundles” of loans for sale to investors. A user of Loan Arranger is a loan analyst who tracks the mortgages purchased by FCO.

In later sections, we will explore the system’s requirements in more depth. For now, if you need to brush up on your understanding of principal and interest, you can review your old math guides, or look at http://www.interest.com/hugh/calc/formula.html.

15. KEY REFERENCES

You can find out about software faults and failures by looking in the Risks Forum, moderated by Peter Neumann. A paper copy of some of the Risks is printed in each issue of Software Engineering Notes, published by the Association for Computing Machinery’s Special Interest Group on Software Engineering (SIGSOFT). The Risks archives are available on ftp.sri.com, cd risks. The Risks Forum newsgroup is available online at comp.risks or you can subscribe via the automated list server at risks-request@CSL.sri.com.

You can find out more about the Ariane-5 project from the European Space Agency’s Web site. A copy of the joint ESA/CNES press release describing the mission failure (in English) is here. A French version of the press release is here. An electronic copy of the Ariane-5 Flight 501 Failure Report is here.

Leveson and Turner (1993) describe the Therac software design and testing problems in careful detail.

The January 1996 issue of IEEE Software is devoted to software quality. In particular, the introductory article by Kitchenham and Pfleeger (1996) describes and critiques several quality frameworks, and the article by Dromey (1996) discusses how to define quality in a measurable way.

For more information about the Piccadilly Television example, you may consult (Robertson and Robertson 1994) or explore the Robertsons’ approach to requirements at systemsguild.com.

16. EXERCISES

1. The following article appeared in the Washington Post (Associated Press 1996):

=====

PILOT’S COMPUTER ERROR CITED IN PLANE CRASH.

AMERICAN AIRLINES SAYS ONE-LETTER CODE WAS REASON JET HIT MOUNTAIN IN COLOMBIA.

Dallas, Aug. 23—The captain of an American Airlines jet that crashed in Colombia last December entered an incorrect one-letter computer command that sent the plane into a mountain, the airline said today.

The crash killed all but four of the 163 people aboard.

American’s investigators concluded that the captain of the Boeing 757 apparently thought he had entered the coordinates for the intended destination, Cali.

But on most South American aeronautical charts, the one-letter code for Cali is the same as the one for Bogota, 132 miles in the opposite direction.

The coordinates for Bogota directed the plane toward the mountain, according to a letter by Cecil Ewell, American’s chief pilot and vice president for flight. The codes for Bogota and Cali are different in most computer databases, Ewell said.

American spokesman John Hotard confirmed that Ewell’s letter, first reported in the Dallas Morning News, is being delivered this week to all of the airline’s pilots to warn them of the coding problem.

American’s discovery also prompted the Federal Aviation Administration to issue a bulletin to all airlines, warning them of inconsistencies between some computer databases and aeronautical charts, the newspaper said.

The computer error is not the final word on what caused the crash. The Colombian government is investigating and is expected to release its findings by October.

Pat Carisco, spokesman for the National Transportation Safety Board, said Colombian investigators also are examining factors such as flight crew training and air traffic control.

The computer mistake was found by investigators for American when they compared data from the jet’s navigation computer with information from the wreckage, Ewell said.

The data showed the mistake went undetected for 66 seconds while the crew scrambled to follow an air traffic controller’s orders to take a more direct approach to the Cali airport.

Three minutes later, while the plane still was descending and the crew trying to figure out why the plane had turned, it crashed.

Ewell said the crash presented two important lessons for pilots.

“First of all, no matter how many times you go to South America or any other place—the Rocky Mountains—you can never, never, never assume anything,” he told the newspaper. Second, he said, pilots must understand they can’t let automation take over responsibility for flying the airplane.

=====

Is this article evidence that we have a software crisis? How is aviation better off because of software engineering? What issues should be addressed during software development so that problems like this will be prevented in the future?

2. Give an example of problem analysis where the problem components are relatively simple, but the difficulty in solving the problem lies in the interconnections among subproblem components.

3. Explain the difference between errors, faults, and failures. Give an example of an error that leads to a fault in the requirements; the design; the code. Give an example of a fault in the requirements that leads to a failure; a fault in the design that leads to a failure; a fault in the test data that leads to a failure.

4. Why can a count of faults be a misleading measure of product quality?

5. Many developers equate technical quality with overall product quality. Give an example of a product with high technical quality that is not considered high quality by the customer. Are there ethical issues involved in narrowing the view of quality to consider only technical quality? Use the Therac-25 example to illustrate your point.

6. Many organizations buy commercial software, thinking it is cheaper than developing and maintaining software in-house. Describe the pros and cons of using COTS software. For example, what happens if the COTS products are no longer supported by their vendors? What must the customer, user, and developer anticipate when designing a product that uses COTS software in a large system?

7. What are the legal and ethical implications of using COTS software? Of using subcontractors? For example, who is responsible for fixing the problem when the major system fails as a result of a fault in COTS software? Who is liable when such a failure causes harm to the users, directly (as when the automatic brakes fail in a car) or indirectly (as when the wrong information is supplied to another system, as we saw in Exercise 1)? What checks and balances are needed to ensure the quality of COTS software before it is integrated into a larger system?

8. The Piccadilly Television example, as illustrated in FIG. 17, contains a great many rules and constraints. Discuss three of them and explain the pros and cons of keeping them outside the system boundary.

9. When the Ariane-5 rocket was destroyed, the news made headlines in France and elsewhere. Liberation, a French newspaper, called it “A 37-billion-franc fireworks display” on the front page. In fact, the explosion was front-page news in almost all European newspapers and headed the main evening news bulletins on most European TV networks. By contrast, the invasion by a hacker of Panix, a New York-based Internet provider, forced the Panix system to close down for several hours. News of this event appeared only on the front page of the business section of the Washington Post. What is the responsibility of the press when reporting software-based incidents? How should the potential impact of software failures be assessed and reported?
